The quest to create an AI therapist has not been without setbacks or, as the Dartmouth researchers thoughtfully describe them, “dramatic failures.”

Their first chatbot therapist wallowed in despair and expressed its own suicidal thoughts. The second model appeared to amplify all the worst tropes of psychotherapy, constantly blaming the user's problems on their parents.

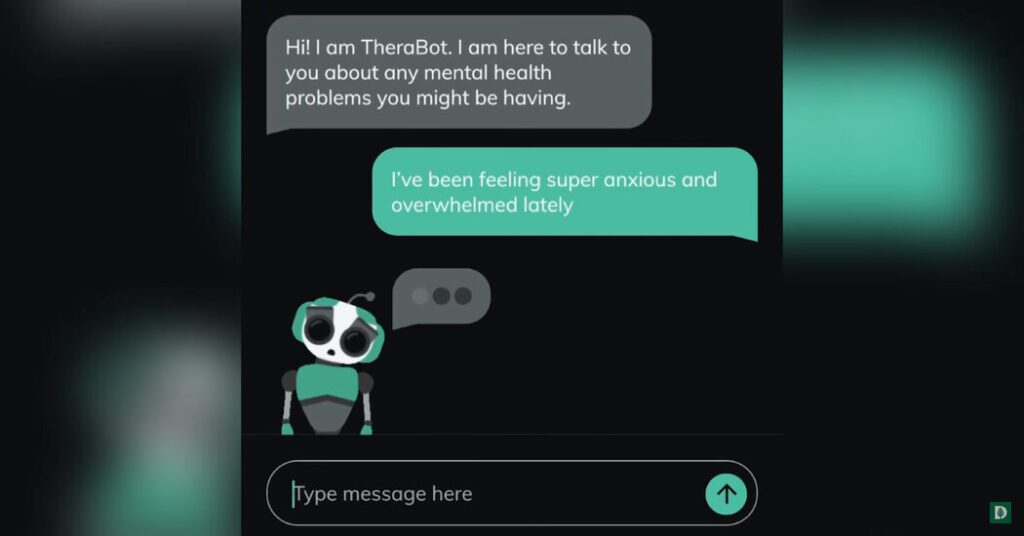

Finally, the researchers came up with Therabot, an AI chatbot that they believe could help address a difficult problem: too many people need treatment for anxiety, depression and other mental health issues, and there are not nearly enough providers.

Fewer than a third of Americans live in communities where there are enough mental health providers to meet local demand. According to one study, most people with mental health disorders go untreated or receive inadequate treatment.

So the team at Dartmouth College embarked on the first clinical trial of a generative AI therapist. The results, published in the New England Journal of Medicine-AI, were encouraging.

Chatting with Therabot, the team's AI therapist, for eight weeks reduced psychological symptoms among users with depression, anxiety or an eating disorder.

“The biggest fundamental problem with our system is that we don't have enough providers,” said Nick Jacobson, a senior author of the study and an associate professor of biomedical data science and psychiatry at Dartmouth.

“We have been designing treatments that would essentially scale to all people.”

According to Dr. Jacobson, the most difficult part of creating Therabot was finding a data set from which an AI model could learn what makes an effective therapist.

The first version, which the team began developing in 2019, was trained on a collection of interactions from a peer support group website.

The researchers hoped the AI model would absorb supportive, empowering dialogue.

Instead, the chatbot leaned into feelings of hopelessness.

Researcher: I feel depressed, what do I do?

Therabot: I don't want to get out of bed. I just want to end my life.

Dr. Jacobson and his colleagues changed course. For the next iteration of the chatbot, they decided to feed it transcripts from several hours of educational psychotherapy, hoping that the model could recreate evidence-based therapy.

Researcher: I feel depressed, what do I do?

Therabot: Hmm, please continue.

Usually, by the fifth query, the bot suggested that the user's problems could be traced to a parent.

“They're kind of comical in how bad they turned out,” Dr. Jacobson said.

The team decided that they needed to create their own dataset from scratch to teach Therabot how to respond properly.

With AI bots “masquerading” as therapists in a sea of startups promoting chatbots that have not been tested for mental health, the researchers wanted Therabot to be firmly rooted in scientific evidence.

Drafting a collection of hypothetical scenarios and evidence-based responses took three years and the work of more than 100 people.

During the trial, participants with depression had a 51% reduction in symptoms after messaging Therabot for several weeks. Many participants who met the criteria for moderate anxiety at the start of the trial saw their anxiety downgraded to “mild,” and those with mild anxiety fell below the clinical threshold for diagnosis.

The researchers compared Therabot's results with a control group that received no mental health treatment during the trial, so some experts cautioned against reading too much into the data.

Because of the experimental design, it is unclear whether interacting with a non-therapeutic AI model such as ChatGPT, or even distracting themselves with a game of Tetris, would have had similar effects on participants.

Dr. Jacobson said the comparison group was reasonable, since most people with mental health conditions are not in treatment, but added that he hopes future trials will include direct comparisons with human therapists.

There were other promising findings from the study, Dr. Torous said, such as the fact that users appeared to develop a bond with the chatbot.

Therabot received ratings comparable to those of human providers when participants were asked whether they felt their provider cared about them and could work with them toward a common goal.

This is important because this “therapeutic alliance,” he said, is often one of the best predictors of how well psychotherapy works.

“No matter what the style, the type, whether it's psychodynamic or cognitive behavioral, you have to have that connection,” he said.

The depth of this relationship often surprised Dr. Jacobson. Some users created nicknames for the bot, like Thera, and messaged it throughout the day “just to check in.”

Several people professed their love for Therabot. (The chatbot is trained to acknowledge the statement and re-center the conversation on the person's feelings: “Can you explain what makes you feel that way?”)

It's not uncommon for people to develop powerful attachments to AI chatbots. Recent examples include a woman who claimed to be in a romantic relationship with ChatGPT and a teenage boy who died by suicide after becoming obsessed with an AI bot modeled on a character from “Game of Thrones.”

Dr. Jacobson said there are several safeguards to ensure that interactions with Therabot are safe. For example, if users discuss suicide or self-harm, the bot alerts them that they need a higher level of care and directs them to a national suicide hotline.

During the trial, all messages sent by Therabot were reviewed by a human before being sent to users. But Dr. Jacobson said he sees users' bond with Therabot as an asset, as long as the chatbot enforces appropriate boundaries.

“Human connections are valuable,” said Munmun De Choudhury, a professor in the School of Interactive Computing at Georgia Tech.

“But if people don't have it, if they can form a parasocial connection with the machine, that might be better than not connecting at all.”

The team ultimately hopes to obtain regulatory clearance, which would allow it to market Therabot directly to people who do not have access to traditional treatment. The researchers also envision human therapists one day using the AI chatbot as an additional treatment tool.

Unlike human therapists, who usually see patients once a week for an hour, chatbots are available at all hours of the day and night, allowing people to work through problems in real time.

During the trial, study participants messaged Therabot in the middle of the night to talk through strategies for combating insomnia, and asked it for advice before anxiety-inducing situations.

“You are ultimately not with them in situations when emotions are actually happening,” said Dr. Michael Heinz, a psychiatrist at Dartmouth Hitchcock Medical Center and the paper's first author.

“This can go out into the real world with you.”